[ AI Art ] - Stable Diffusion Discussion and Sharing 02

武吉士

724 replies


Any other monster girls? Slime girl, please.

睡醒

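I made these a long time ago. Now that ControlNet is available, redoing them should turn out much better.

I once searched to see whether those keywords actually do anything: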

They work on the NovelAI (NAI) model and its descendants (such as Anything, Orange Abyss), because the model was actually trained on those keywords.

They don't work on models that don't have NAI as an ancestor, or that have been merged so many times that the NAI component is diluted.

This is the answer. Almost every anime-based model has NovelAI as one of its "parents" that it was merged from, and so the keywords NovelAI was trained on have an impact when using those models.

To build on this, Waifu Diffusion has [used the same set of keywords](https://cafeai.notion.site/WD-1-5-Beta-Release-Notes-967d3a5ece054d07bb02cba02e8199b7). They used software to assign an aesthetic score to images scraped from a booru and, depending on the score, tagged them with one of these keywords:

* masterpiece

* best quality

* high quality

* medium quality

* normal quality

* low quality

* worst quality

I believe this matches what NovelAI did, so putting "low quality" and "worst quality" in the negative prompt also helps to improve the image quality in most anime-based checkpoints.

https://reddit.com/r/StableDiffusion/s/a4HOquhGgT
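To make the quoted advice concrete, here is a minimal Python sketch that assembles a prompt and a negative prompt from the quality keywords listed above. The helper name `build_prompts` and its default tag choices are assumptions made for illustration; they are not part of NovelAI, Waifu Diffusion, or any web UI, and whether the tags help still depends on the checkpoint having NAI ancestry.

```python
# The seven quality keywords quoted above, best to worst.
QUALITY_TAGS = [
    "masterpiece",
    "best quality",
    "high quality",
    "medium quality",
    "normal quality",
    "low quality",
    "worst quality",
]


def build_prompts(subject,
                  positive_quality=("masterpiece", "best quality"),
                  negative_quality=("low quality", "worst quality")):
    """Return (prompt, negative_prompt) strings with quality tags attached.

    Hypothetical helper: the default tag choices are an assumption,
    not a documented recommendation.
    """
    for tag in (*positive_quality, *negative_quality):
        if tag not in QUALITY_TAGS:
            raise ValueError(f"unknown quality tag: {tag!r}")
    prompt = ", ".join([*positive_quality, subject])
    negative_prompt = ", ".join(negative_quality)
    return prompt, negative_prompt


prompt, negative_prompt = build_prompts("1girl, slime girl")
print(prompt)
print(negative_prompt)
```

The two strings can be pasted into the prompt and negative prompt boxes as-is.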

In my tests, the most useful negative prompt is worst quality.

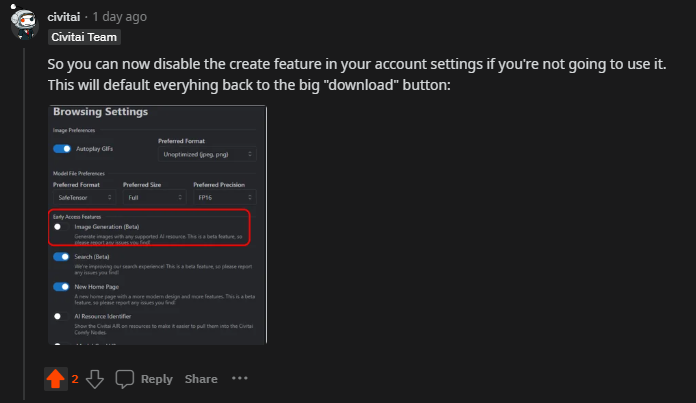

Civitai recently added an annoying feature: sometimes the download button is hidden. You can restore the normal behavior in the settings.

some SDXL fun

Do you mean using an SDXL checkpoint for the img2img upscale as well? I haven't tried that.

SDXL has already done the hardest part, the composition, so the upscale can be done with another checkpoint.

Try upscaling with StableSR.

A1111 Hotkeys:

* Ctrl+Enter - Generate. (Right-click the "Generate" button for Generate Forever/Cancel Generate Forever.)

* Ctrl+up/down on a highlighted LoRA in the prompt box changes its weight.

* Ctrl+up/down on a highlighted word or word group wraps it in parentheses and raises the weight to :1.1 or lowers it to :0.9.

* Alt+left/right arrows reorder the prompt by moving the selected LoRAs or words left or right, towards the beginning or the end of the prompt. (*Words must be separated by commas for them to move with the Alt+left/right arrows.)

* Escape - Closes the image viewer.

Some tips and browser shortcuts that might also be useful in A1111:

* You can drag a PNG or JPG onto the txt2img prompt box to extract the generation data stored in the image metadata (EXIF) into the prompt box.

* F11 - Full Screen/Exit Full Screen. (More real estate for A1111 on your monitor.)

* F3 or Ctrl+F - Find in Page. (Useful for finding repeated words in those really long merged prompts.)

* Ctrl+P - Opens Print. (Save a PDF of all your prompt settings, including a thumbnail.)

* +/- on the number pad - Zoom in/Zoom out. (To read small text in the prompt boxes.)

* Space - Scrolls down one page.

* F5 or Ctrl+R - Reload A1111.
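For anyone curious what the Ctrl+up/down weight hotkey does to the prompt text, below is a rough Python sketch of the `(text:weight)` rewriting. It is not A1111's actual code: the function name `adjust_weight`, the fixed 0.1 step, and the choice to drop the parentheses when the weight returns to 1.0 are assumptions made for illustration.

```python
import re

# Matches an already weighted selection such as "(slime girl:1.1)".
_WEIGHTED = re.compile(r"^\((?P<text>.+):(?P<weight>\d+(?:\.\d+)?)\)$")


def adjust_weight(selection, delta=0.1):
    """Return the selection with its attention weight changed by delta.

    Hypothetical helper that mimics the hotkey on a single selection.
    """
    match = _WEIGHTED.match(selection)
    if match:
        text, weight = match["text"], float(match["weight"])
    else:
        # Plain text carries an implicit weight of 1.0.
        text, weight = selection, 1.0
    new_weight = round(weight + delta, 2)
    if new_weight == 1.0:
        # Assumption: a weight of exactly 1.0 needs no wrapper.
        return text
    return f"({text}:{new_weight:g})"


print(adjust_weight("slime girl"))         # one press of Ctrl+up
print(adjust_weight("slime girl", -0.1))   # one press of Ctrl+down
```

Calling the function repeatedly on its own output corresponds to pressing the hotkey several times on the same selection.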

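How did you make these? Which LoRA did you use?

The main trick is using ControlNet to steer what the image turns out like.

For example, for these few images I fed an all-black image to shuffle for the first 20% of the steps, so the result gets a black background,

then for the last 50% I used an image with pink neon light, so that parts like the horns and wings come out looking as if they are glowing.

The LoRA is just an ordinary succubus LoRA.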
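The step split described above can be sketched in a few lines of Python. This only works out which sampling steps each reference image would influence; it does not call ControlNet. The `ControlUnit` class, the file names, the 20-step total, and the exact boundary rule are all assumptions for illustration, and the real extension may round the ranges differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ControlUnit:
    """One reference image plus the slice of sampling it is active for."""
    label: str
    reference_image: str  # hypothetical file name
    start_pct: int        # first percent of the schedule that is covered
    end_pct: int          # percent at which the unit stops applying


def active_steps(unit: ControlUnit, total_steps: int) -> List[int]:
    """Return the 0-based sampling steps on which the unit is applied.

    Integer arithmetic avoids floating point surprises at the boundaries.
    """
    return [
        step
        for step in range(total_steps)
        if unit.start_pct * total_steps <= step * 100 < unit.end_pct * total_steps
    ]


# All-black image for the first 20% of the steps, pink neon for the last 50%.
black = ControlUnit("black background", "black.png", 0, 20)
neon = ControlUnit("pink neon glow", "pink_neon.png", 50, 100)

for unit in (black, neon):
    print(unit.label, active_steps(unit, total_steps=20))
```

Under these assumptions, steps 4 through 9 are left unguided, so the model is free to settle the subject between the background hint and the glow hint.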

Bro, the two images at the bottom of your post look great.

Looks like I need to start looking into SDXL.

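The one done with the 8x_NMKD-Superscale_150000_G upscaler is much better. The one done with the refiner is somehow both blurry and over-sharpened, which looks very unnatural.

I like generating large images directly. As long as the aspect ratio suits the subject, even SD1.5 rarely produces monstrosities when generating directly at 960x1360. The images below were generated directly, with no upscaling or editing.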

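Three Kingdoms general portraits, pixel art test.

How do you make a transparent background?

There are several extensions that can remove the background, but many of them don't work on A1111 v1.6.

PNG images support an alpha mask.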

https://github.com/continue-revolution/sd-webui-segment-anything#readme
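To show what "PNG supports an alpha mask" means in practice, here is a small standard-library Python sketch that merges RGB pixels with a mask and encodes the result as an RGBA PNG. It is not how the linked extension works; the function names and the 2x2 demo data are made up for illustration, and in a real workflow the mask would come from a segmentation tool.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def _chunk(kind, data):
    """Build one PNG chunk: length, type, data, CRC over type and data."""
    crc = zlib.crc32(kind + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + kind + data + struct.pack(">I", crc)


def apply_alpha_mask(rgb_rows, mask_rows):
    """Attach a 0-255 mask value to every RGB pixel (0 = fully transparent)."""
    return [
        [(r, g, b, a) for (r, g, b), a in zip(rgb_row, mask_row)]
        for rgb_row, mask_row in zip(rgb_rows, mask_rows)
    ]


def encode_rgba_png(rgba_rows):
    """Encode rows of (r, g, b, a) tuples as an 8-bit RGBA PNG."""
    height, width = len(rgba_rows), len(rgba_rows[0])
    # Bit depth 8, color type 6 (truecolor with alpha), no interlacing.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 6, 0, 0, 0)
    raw = bytearray()
    for row in rgba_rows:
        raw.append(0)  # filter type 0 (none) at the start of each scanline
        for pixel in row:
            raw.extend(pixel)
    return (PNG_SIGNATURE
            + _chunk(b"IHDR", ihdr)
            + _chunk(b"IDAT", zlib.compress(bytes(raw)))
            + _chunk(b"IEND", b""))


# 2x2 demo: keep the left column opaque, make the right column transparent.
rgb = [[(255, 0, 128), (0, 0, 0)], [(255, 0, 128), (0, 0, 0)]]
mask = [[255, 0], [255, 0]]
png_bytes = encode_rgba_png(apply_alpha_mask(rgb, mask))
```

Writing `png_bytes` to a file opened in binary mode gives an image whose right column is see-through in any viewer that honors alpha.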
