簡單講下CNN結構,主要由幾款layer組成

1. convolution (提取feature)

2. pooling (feature 簡化)

3. non-linearalize function (提高系統的非線性程度)

4. fully connected/ inner product (傳統ANN)

5. classification/ deconcolution(output最終結果)

以前NN 唔可行因為所有pixel間都要行neuron connection, 10*10 的圖 output 去10*10 的layer就有 100^2 咁撚多的組合,CNN 用左convolution同pooling的技改提取image faeture進行簡化,咁一層layer用10個faeture kernel, 每個kernel 3*3, 咁只係得3*3*10 個neuron要fit, 令到NN 呢個想法實際可行

太複雜啦 等比人屌啦你

太複雜啦 等比人屌啦你

屌你咩已經無得再淺

一係由咩叫kernel,咩係convolution開始講

簡單d就係俾一堆圖(e.g. 車)俾電腦睇,淨係同佢講呢樣野叫車,電腦自動搵"車"的feature,e.g. 有碌,有窗,有bumper, 再深多兩層就人都理解唔到的東西,叫feature vector,搵一大堆數function去描述d 特徵

CNN 同人類局域視角好有關,但我唔想講人類點樣去理解世界呢樣野

可唔可以講詳細少少點用convolution嚟搵feature?

利申:知convolution同kernel乜嚟,講得數學啲都ok

Convolution其實係一個spatial filter, 例如最簡單嘅Sobel kernel:

[1 0 -1;

2 0 -2;

1 0 -1]

就可以搵到圖形的邊界:

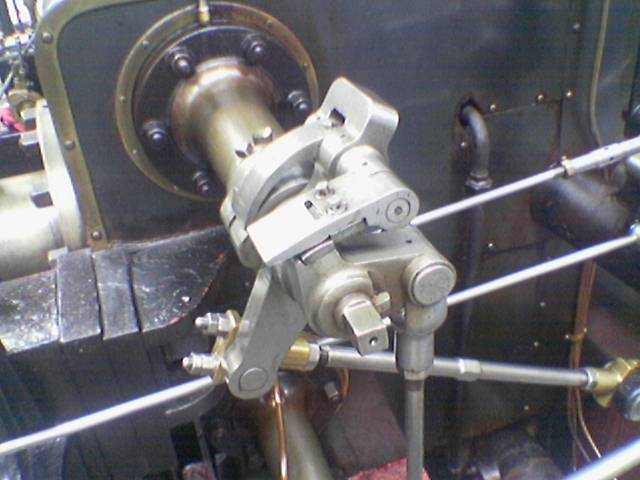

before:

.PNG)

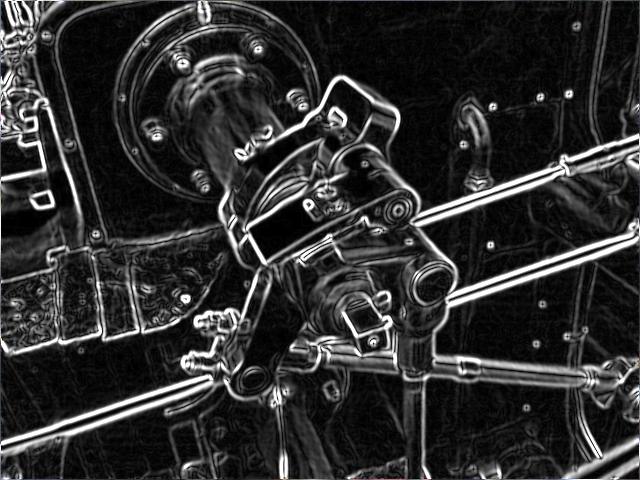

after:

.PNG)

唔同kernel 會產生唔同feature, 所以CNN個kernel都係要由data train出黎。

數學d咁講 low pass filter就係filter走不斷升跌既pattern

咁反觀high pass filter就係filter走太flat(?)既wave, 留返d high perturbation(?) (即係d 幼既線)

只係咁嘅話令我有種用啲高深字眼包裝一啲已知好耐嘅嘢嘅感覺

咁你數學好

你可以google下sift,surf,brief,orb feature既 呢d係cv比較出名既feature extraction

同埋math果邊就好興用TV,應該算係pde approach(?)

Tony Chan佢個model用左個果時黎講好特別既approach所以好出名

Normally segmentation係based on edge咁剪

但係佢就proposed minimize (pixel既intensity - 屬於同一個region既mean intensity) (我都唔係好識講 不過睇返佢果份paper應該難唔到你

太複雜啦 等比人屌啦你

太複雜啦 等比人屌啦你