So I've actually started bringing self-hosted AI into my work lately

極北鷲

269 replies

82 Like

41 Dislike

finetune

For self-hosting, are there any models that come in a version without the ethical guardrails?

They refuse to answer a lot of what I ask.


What counts as "fake AI"?

How is that different from taking an LLM and fine-tuning it into what I want?


Me

I use GitHub Copilot together with OpenRouter.

I write code in VS Code with Copilot.

For small projects, I start by using Cline + the OpenRouter API (Sonnet) to generate the initial code.

So far it's OK.


Yes, there are.

Go to r/LocalLLaMA and search for "uncensored" or "abliterated".


Llama-3.3-70B-Instruct

128K context, multilingual, enhanced tool calling, outperforms Llama 3.1 70B and comparable to Llama 405B 🔥

Comparable performance to 405B with roughly 6x fewer parameters

Improvements (3.3 70B vs 405B):

GPQA Diamond (CoT): 50.5% vs 49.0%

MATH (CoT): 77.0% vs 73.8%

Steerability (IFEval): 92.1% vs 88.6%

Improvements (3.3 70B vs 3.1 70B):

Code Generation:

HumanEval: 80.5% → 88.4% (+7.9%)

MBPP EvalPlus: 86.0% → 87.6% (+1.6%)

Steerability:

IFEval: 87.5% → 92.1% (+4.6%)

Reasoning & Math:

GPQA Diamond (CoT): 48.0% → 50.5% (+2.5%)

MATH (CoT): 68.0% → 77.0% (+9%)

Multilingual Capabilities:

MGSM: 86.9% → 91.1% (+4.2%)

MMLU Pro:

MMLU Pro (CoT): 66.4% → 68.9% (+2.5%)

https://www.reddit.com/r/LocalLLaMA/comments/1h85ld5/llama3370binstruct_hugging_face/


How many 4090s does it take to run this?

2x 4090 = 48 GB of VRAM

Llama 70B Q4_K_M 42.52GB

https://huggingface.co/bartowski/Llama-3.3-70B-Instruct-GGUF


https://ollama.com/blog/structured-outputs

Ollama now supports structured outputs making it possible to constrain a model’s output to a specific format defined by a JSON schema. The Ollama Python and JavaScript libraries have been updated to support structured outputs.
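For anyone who wants to try it, here is a minimal sketch of a structured-output request against Ollama's REST chat endpoint, which takes the JSON schema in the `format` field. The schema, the model name `llama3.3`, and the helper names are assumptions made up for illustration; the default local URL assumes a stock Ollama install.

```python
import json
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"

# Hypothetical schema for illustration: describe a model in a fixed shape.
MODEL_INFO_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "parameters_b": {"type": "number"},
        "quantization": {"type": "string"},
    },
    "required": ["name", "parameters_b", "quantization"],
}


def build_chat_payload(model, prompt, schema):
    """Build the request body; the JSON schema goes in the `format` field."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "format": schema,
        "stream": False,  # ask for one complete response instead of chunks
    }


def parse_structured_reply(response_body):
    """The reply text sits in message.content and is itself a JSON string."""
    data = json.loads(response_body)
    return json.loads(data["message"]["content"])


def chat_structured(model, prompt, schema, url=OLLAMA_CHAT_URL):
    """Send the request to the local server and return the parsed object."""
    body = json.dumps(build_chat_payload(model, prompt, schema)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return parse_structured_reply(resp.read().decode("utf-8"))
```

With a server running, `chat_structured("llama3.3", "Describe Llama 3.3 70B Q4_K_M.", MODEL_INFO_SCHEMA)` should hand back a dict with those three keys. The schema constrains the shape of the output, not whether the values are true.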

Nice.


Just barely enough.
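The arithmetic behind that, as a quick sketch using only the numbers quoted in the thread (24 GB per 4090, a 42.52 GB Q4_K_M file). The function name is made up for illustration, and it ignores GB vs GiB differences.

```python
GPU_VRAM_GB = 24.0     # VRAM on one RTX 4090
NUM_GPUS = 2
MODEL_FILE_GB = 42.52  # Q4_K_M file size quoted in the thread


def vram_headroom_gb(num_gpus, vram_per_gpu_gb, model_gb):
    """VRAM left over after the model weights are loaded."""
    return num_gpus * vram_per_gpu_gb - model_gb


headroom = vram_headroom_gb(NUM_GPUS, GPU_VRAM_GB, MODEL_FILE_GB)
print(f"Total VRAM: {NUM_GPUS * GPU_VRAM_GB:.2f} GB, headroom: {headroom:.2f} GB")
```

That leaves about 5.5 GB, and the KV cache and runtime overhead have to fit in it too, so long contexts will probably not fit at this quantization.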

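Control over text in generated images is still very weak right now.

In the end it still comes down to generating the image and then overlaying the text.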

My goal isn't NSFW images anyway. I want to make something more meaningful, like Lunar New Year or birthday cards.

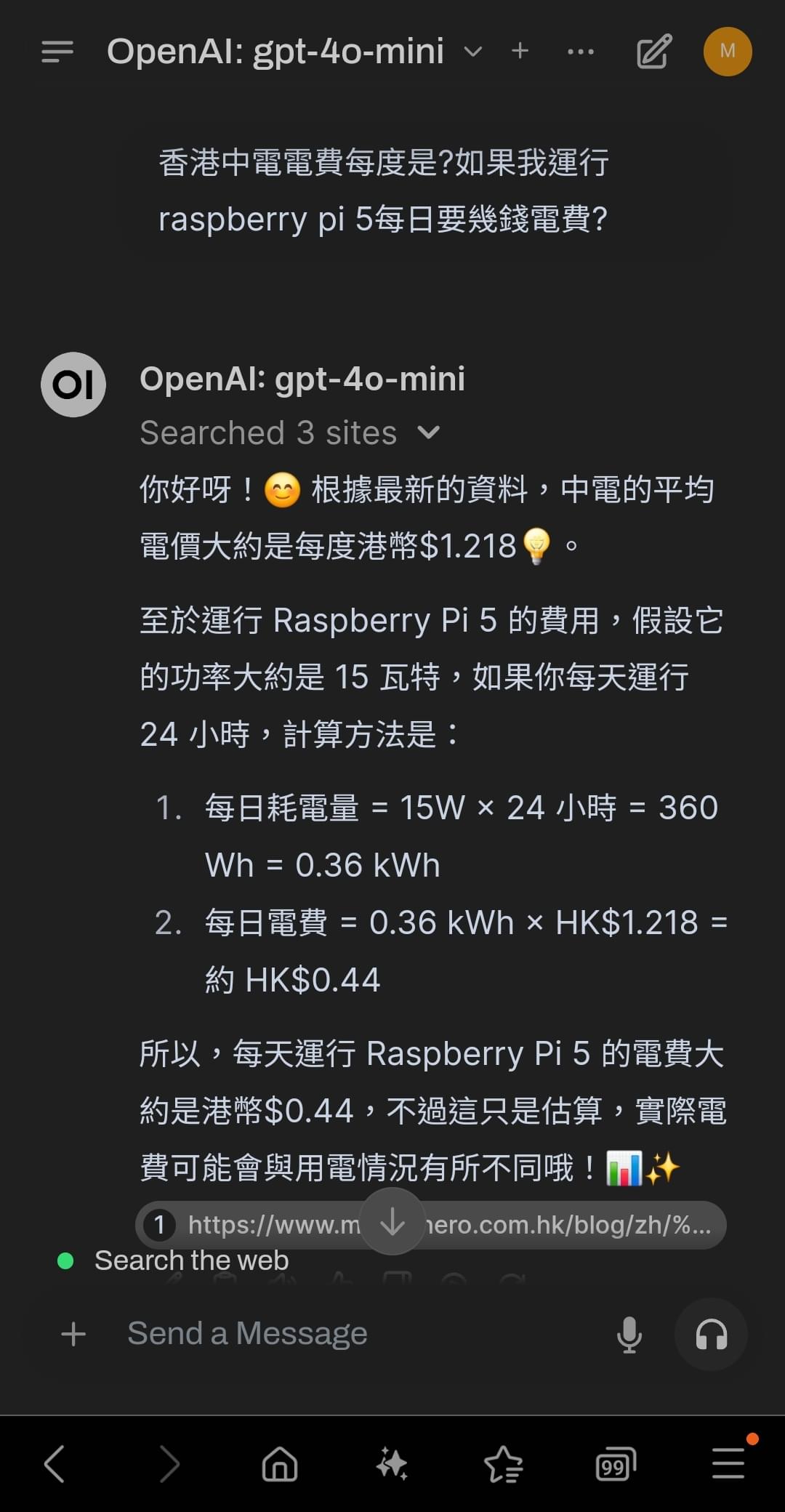

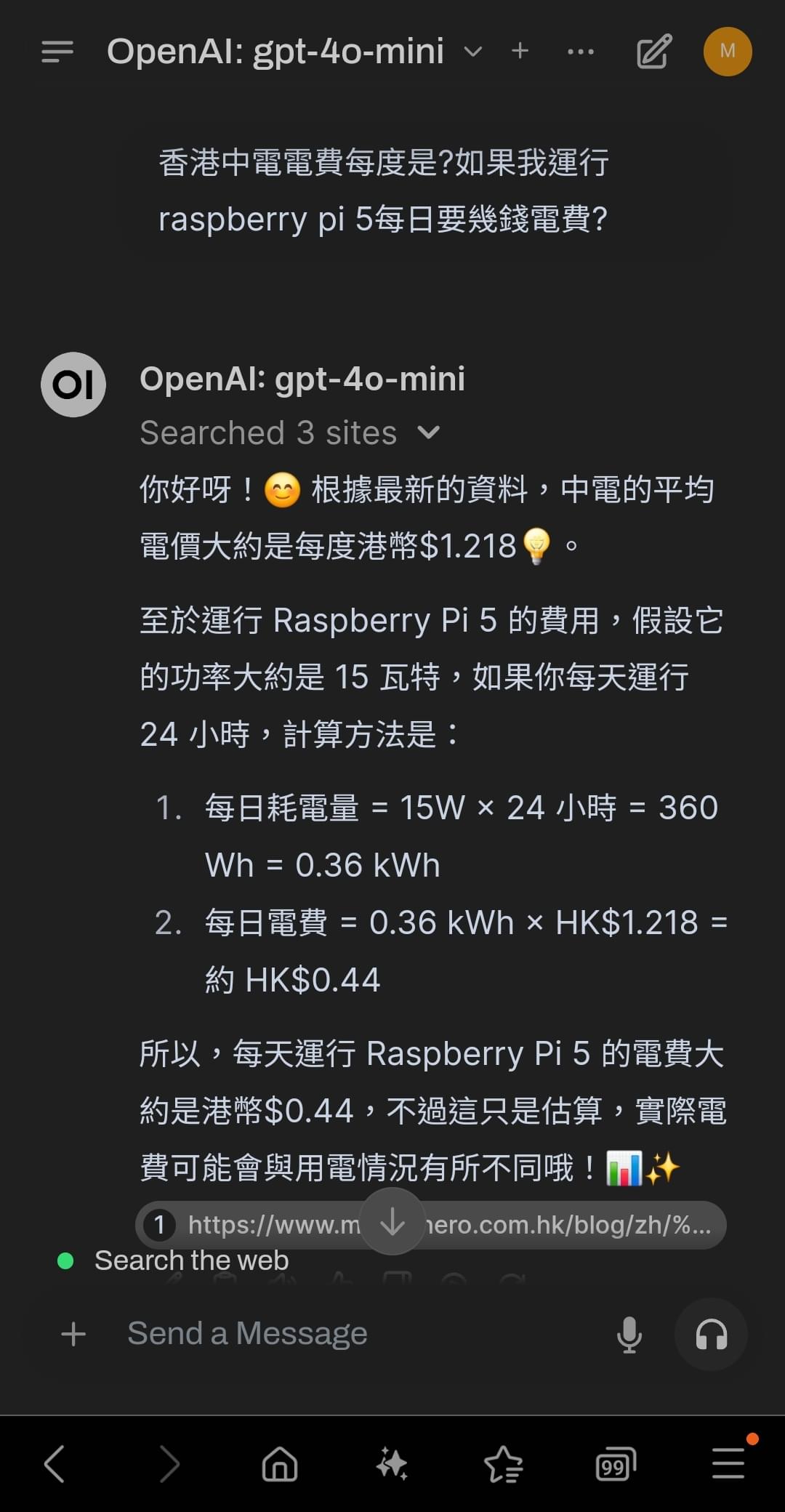

Daily cost:

AWS EC2 free tier: $0

RPi 5 electricity: $0.4

API cost: GPT-4o mini, I use about $0.5 a day

Google Custom Search JSON API (100 free queries per day): $0

No VPN needed, reachable from both mobile and PC, and the daily cost is under $1.
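Adding up that breakdown, as a small sketch. The figures are the ones posted above; the currency is not stated in the post, and the 30-day month is an assumption.

```python
# Daily cost items as quoted in the post (currency not stated there).
daily_costs = {
    "AWS EC2 free tier": 0.0,
    "RPi 5 electricity": 0.4,
    "GPT-4o mini API": 0.5,
    "Google search JSON API (free quota)": 0.0,
}

daily_total = sum(daily_costs.values())
monthly_total = daily_total * 30  # assumes a 30-day month

print(f"Per day: ${daily_total:.2f}, per 30 days: ${monthly_total:.2f}")
```

The API usage is the only part that scales with how much you use it; the rest is fixed or free.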


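If you don't want your data sent to China, OpenRouter now lets you pick Together.ai as the inference provider for DeepSeek V3.

It looks like the DeepSeek V3 license is royalty-free this time, so plenty of hosts should be able to offer it before long. The Chinese guys deserve credit for this one.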
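A rough sketch of pinning the provider in an OpenRouter request, using the `provider` routing object with `order` and `allow_fallbacks`. The model slug `deepseek/deepseek-chat` and the provider label `Together` are assumptions here; check the model page on OpenRouter for the exact strings. The `OPENROUTER_API_KEY` environment variable name is also just a convention picked for this example.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request_body(prompt, model="deepseek/deepseek-chat", provider="Together"):
    """Build a chat request that is routed to one named provider only."""
    return {
        "model": model,  # assumed slug for DeepSeek V3
        "messages": [{"role": "user", "content": prompt}],
        "provider": {
            "order": [provider],       # try this provider first
            "allow_fallbacks": False,  # fail instead of routing elsewhere
        },
    }


def send(prompt):
    """POST the request; needs OPENROUTER_API_KEY set in the environment."""
    req = urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(build_request_body(prompt)).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {os.environ['OPENROUTER_API_KEY']}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Turning fallbacks off is the important part: otherwise a request can quietly be served by a different host when the preferred one is busy.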

In open-source LLMs the Chinese guys are flat-out dominating.

Nobody really needs to worry about having no AI to use.

With all this hassle, you'd be better off just paying for the big three's APIs.

What is hosting an LLM yourself actually good for?

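We were among the first batch of corporate GitHub Copilot customers and have used it for over a year. Its VS Code integration really is the best.

It's a CCP product, and closed source on top of that. Would you dare to use it?

Closed source would be a dead end, so they're forced to go open source.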

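Honestly, Westerners use it to write code; nobody cares whether it will answer you about 8964.

As long as it's cheap and good enough, people will use it even without open weights.

Right now DeepSeek's API states outright that it will use your data for training, and people on Reddit use it all the same.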

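Because it's text-heavy. On the ARM architecture the memory is unified, so the usable VRAM is more than the 3080's 10 GB.

For company work I'm worried about data leakage, so a local LLM + RAG does the wrap-up first, and only then is the result passed to the Claude API to revamp the answer.

The release of the Jetson Nano Super has also changed the ecosystem.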
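One way that flow could look, as a toy sketch rather than the poster's actual setup. The retriever is a plain word-overlap ranking standing in for real RAG, and `local_llm` and `cloud_llm` are hypothetical callables you would wire to a local model and to the Claude API yourself.

```python
from typing import Callable, List


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Toy retriever: rank documents by how many query words they share."""
    query_words = set(query.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def answer_with_local_then_cloud(
    query: str,
    documents: List[str],
    local_llm: Callable[[str], str],
    cloud_llm: Callable[[str], str],
    top_k: int = 2,
) -> str:
    """Draft locally from internal documents, then polish the draft remotely."""
    context = "\n".join(retrieve(query, documents, top_k))
    # Step 1: raw internal documents are only ever shown to the local model.
    draft = local_llm(f"Context:\n{context}\n\nQuestion: {query}")
    # Step 2: only the local draft is sent to the hosted model for rewriting.
    return cloud_llm(f"Rewrite this answer clearly:\n{draft}")
```

The raw documents never go to the hosted API in this layout, but the draft still can carry sensitive details, so a redaction pass before step 2 is worth considering.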

Am I the only one who dislikes AI images as blog thumbnails?

Something about them just feels off.

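It's already gotten out of hand. The worst are those YouTube AI product rumor videos: complete nonsense and wildly wrong.

What I dislike most is AI-read news.

A total waste of breath.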
